Being Human in the Age of AI

A personal reflection on creativity, trust, and machine-made 'magic'

Today I’m going to talk about AI. You know: the technology that, among other things, allows you to take a piece of postal marketing like this:

…and improve it like this:

All within the space of about fifteen seconds.

Specifically, I want to focus on the psychological impact that AI might have on how we think, feel, and value our own humanity.

We'll therefore set aside the looming issues of job losses, deepfakes, disinformation, and a crumbling sense of what's real – all warnings raised by Geoffrey Hinton, often dubbed the Godfather of AI, back in 2023.

We'll also look past the unsustainable energy demands of AI, and the fact that it's outpacing our ability to manage it, like a stag party in an air traffic control room. We'll save all that for another glorious day.

Today, I want to discuss a different kind of existential risk: that AI makes the world more vanilla. That we stop appreciating and respecting the hard work involved in human creativity, and lose our sense of value for the messy, beautiful imperfections that make human expression and life what they are.

The possible result of this? A 'super-optimised world' that's streamlined, polished, and strangely hollow. And, perhaps most concerningly, one where we don't trust what we're seeing, reading, or even feeling.

That's what I want to explore here. The purpose of this article isn't to deliver answers (if only), but to spark thought and debate. I'm not claiming to be right; I'm simply sharing how I feel.

Let me tell you a story

In March this year, my sister-in-law invited me to an evening painting class. I felt a little nervous, having not picked up a paintbrush since my GCSE art class thirty years earlier. I didn't want to end up producing something that looked like it was painted by a six-year-old. Inside my head, the same conversation kept playing out:

Me: What do you think?

Friend: That's nice. Did your daughter paint it?

Me: 😳

Despite feeling a little apprehensive, I went along, and I really enjoyed it. By the end, I was quietly pleased with how my painting had turned out:

Being a perfectionist, there were a few elements I liked and some (many) I didn't. Then came a thought: how might my picture look if I asked ChatGPT to take it and 'improve it'? So, in the spirit of experimentation and self-sabotage, I decided to find out.

I uploaded my image to ChatGPT, gave it a simple prompt, and sat back to wait. After fifteen seconds of feeling like I'd just fed my self-esteem into a shredder, an image popped up on my screen:

It's good, isn't it? If you ignore the fact that there’s no door (surely a fire hazard?)

In less time than it takes to say, “Is that a shooting star or a malfunctioning SpaceX satellite?”, ChatGPT had created something far more advanced than I had produced in two hours. As a human, it would take me many years of regular practice, hard work and dedication to match this skill level.

I think it's worth taking a moment to look at how we arrived at this point.

ChatGPT and the rise of AI

ChatGPT first came to prominence in late 2022, and the general reaction from users was one of astonishment. Since then, it – and a growing crowd of other AI tools – has rapidly embedded itself into everyday life.

These tools can assist with everything from coding and admin tasks to content creation, brainstorming, language translation, and more. I use ChatGPT myself: as a personal assistant, to brainstorm, to help improve my grammar, and to create alternative Mr Men characters…

Since its launch, ChatGPT has evolved rapidly, drawing on vast datasets and patterns in human language to improve its responses. It can now write essays, generate jokes, debug code, create photorealistic images, help plan your wedding (or your latest round of impulsive trade tariffs) and even mimic Shakespeare. Yes, Shakespeare’s back, and he’s writing product descriptions for air fryers.

For an increasing number of tasks, ChatGPT can perform at a level that feels highly competent, sometimes even exceptional. While this sounds impressive, I think there are some big questions hanging over it. Let's start with this one:

Are we going to slowly lose our appreciation for what it means to be human?

Trying to be perfect

I could argue we've been treading the path of perfection for a while now. Take photography as an example. A decade or two ago, we might have been manually Photoshopping our photos on a desktop computer. These days, we carry a computer in our pocket that can edit our photos in moments, at the touch of a button.

Since the Instagram era began, people have been doctoring the narrative of their lives on social media to suit how they want other people to see them. From adding filters and 'improving' photographs taken on our smartphones to selectively sharing the best moments and ignoring the rest, we’ve been airbrushing our reality for public display.

While there's nothing inherently wrong with this, it has taken us in a new direction. Yes, we’ve been editing images for decades, but AI makes the process instant, frictionless, and disturbingly convincing. It can add, remove and move elements, change backgrounds, alter facial expressions, and stitch together the 'ultimate' photograph of a moment that never actually happened.

"Remember that time we surfed on a blue whale?"

(Kid's been eating too many sweets!)

Writing is another area where we’ve leaned on technology for years. We've used computer software to help us improve our work, from grammar checkers to style editors. These days, it's common to rely on software like Grammarly or Hemingway to refine our writing and suggest clearer ways of expressing what we're trying to say. I use Grammarly myself.

When it comes to video, Google recently released Veo 3, marking a significant leap forward. In just two years, AI has progressed from generating rudimentary clips, like the viral "Will Smith eating spaghetti" video, to producing content that's nearly indistinguishable from real footage. Veo 3 can create high-definition videos complete with synchronised dialogue, sound effects, and music, all from simple text prompts.

One might argue that AI is simply taking creative assistance to a higher level, building on the help we're already using. And there’s no doubt there can be genuine skill in using it effectively. The problem is, it’s equally possible to get it to do all of the work for you, like a magic button for creation. And that leaves us at risk of transforming human creativity into something less personal, less meaningful, and far lazier and more manufactured.

The over-reliance on AI has contributed to what critics are calling "AI slop". Think of it as the spam of the 2020s, but slightly less meaty. Like gruel pretending to be crème brûlée. Crème Gruelée, perhaps? The term refers to the deluge of low-effort, AI-generated content that is now saturating the internet.

AI slop comes in many forms, from generic blog articles to spammy videos and misleading images. Rather than being created to inform or inspire, it’s produced to mislead and to game search engines and social media algorithms, with the aim of generating eyeballs, clicks and ad revenue.

The result is a degradation of the internet into a landscape cluttered with material that lacks authenticity and depth, making it harder for genuine human creativity to stand out. Like a handwritten letter buried under a mountain of junk mail.

Beyond the volume of content, there are some real question marks over what this could mean for the craft and expression that sit at the heart of human creativity.

AI and creativity

As humans, we are the most creatively expressive species on the planet. Our brains are capable of composing breathtaking music, painting stunning works of art (present company excluded), and telling stories that move others to tears. But I fear AI could be a looming threat to this.

When it comes to creative work, AI can now write jokes that make you laugh, imitate famous authors, and generate content on almost any subject. It can compose music, produce visually stunning digital art, and mimic the styles of great artists. And it's constantly learning and improving, trained on the most brilliant pieces of creative output ever produced. Crucially, it's setting a high bar for humans to live up to.

So, what happens when we, as humans, spend hours creating something that just doesn't seem... as good? Not only to us, but to others. Suddenly, a piece of work that might once have seemed remarkable by human standards might feel merely... ordinary.

Imagine, for a moment, being surrounded by masterful artists and songwriters; by the most talented writers with the most expansive vocabularies and razor-sharp wit. Your own writing, your own art, might suddenly seem amateur by comparison.

As people with an innate need for validation and recognition, it might be very easy to think, What’s the point? What’s the point in putting in all those hours of work to become a master of your craft, if excellence no longer stands out and AI can just replicate it?

Of course, this isn’t just a personal or creative issue. Entire industries are restructuring around AI, and we’re starting to see the consequences for jobs and livelihoods.

Some people are seeing this storm coming and want to fight back against it. But this brings us to the next significant psychological problem we face with the growing use of AI.

Distrust

Lately, I’ve caught myself doing something I never used to do when looking at online and offline content. I've found myself pausing and instinctively wondering how much human involvement there was. Did a human create this? Did they really put effort into it, or did AI do most of the work? I don't think I'm alone in having that reaction.

We can often look for clues, and sometimes there are obvious ones. But AI is continually evolving, which means that the line between AI-created content and human-created content is becoming increasingly blurred. And this has the potential to push us towards distrust and uncertainty.

To me, this is a growing personal concern, but also a much broader societal one. If we lose the ability to know what to trust, the implications extend far beyond creativity. This is, I feel, a subject for another article, but it's worth mentioning here because trust underpins how society functions, and we're already seeing the consequences of social media disinformation, profit-driven algorithms, and media manipulation.

I've observed in myself that if I believe something was created entirely by AI, with little human effort and no disclosure, I feel uneasy. Subconsciously, it feels like a kind of deceit, as if someone is passing something synthetic off as real.

But here's the issue: I can't know for sure. Did AI create the whole thing for them, or did it just polish it? How much human effort went into what I'm seeing? Is this 10% human-created? 20%? 90%?

That brings us to an even harder question to answer: where exactly is the line? If AI “helps” me write better, is the outcome still mine? I'd argue it is, but there must be a line somewhere. If we leave that line to human judgment, it could get very blurry. Like handing a child a big box of doughnuts and telling them to be sensible. You know full well what’s going to happen.

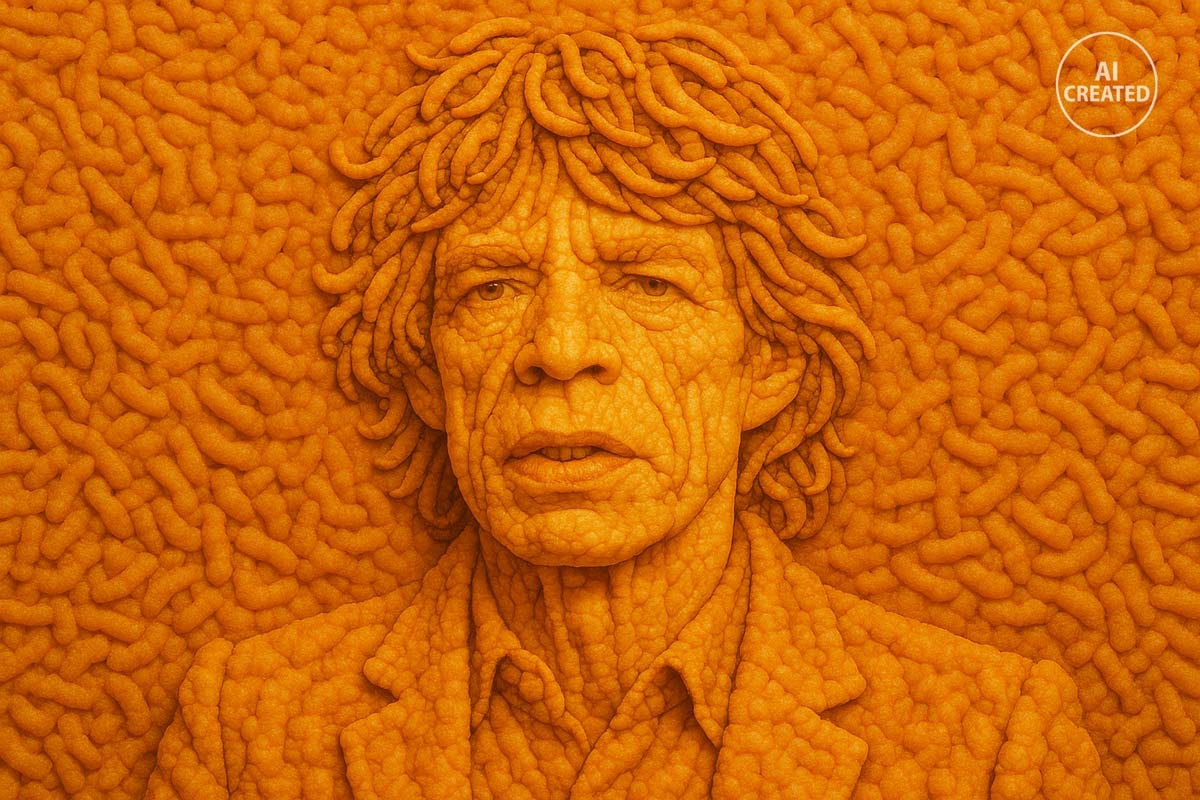

If we can’t rely on creators or software to stick to an acceptable-use limit, it leaves the door open to interpretation, and the judgment falls to the audience instead. But, in the case of a digital image, how can the rest of us tell how much effort went in? How do we know whether someone spent hours writing prompts to create their piece of art or simply said, “create me a portrait of Mick Jagger made entirely of Wotsits”?

All of this leaves us with a problem. If we want to push back against the overuse of AI, how can we do that when we don't know for sure what is and isn’t AI-created, and to what extent?

Final thoughts

There's no denying that AI brings benefits, particularly in diagnostics and medical research, in helping to combat climate change, and in other uses that genuinely serve humanity and the planet. I'm under no illusion that these are significant. It's also useful as a tool to aid human creation, to do tasks that enable us to become better creators ourselves.

I feel somewhat conflicted because I find AI useful as an assistant in my work and thought process, and I see its benefits. But the danger comes when we overuse it. If AI-created content becomes too abundant and wrapped up in our lives, human content may no longer stand out, instead blending into a sea of machine-made sameness.

Right now, I worry that AI is being overused in areas where human input still matters most. It's becoming like Pandora's Box, and we're starting to see AI used in ways that risk undermining creativity, meaning, and our deeper human needs.

Maybe part of the answer lies in transparency. It might be idealistic, but if content generated by AI were labelled as such, we could make our own judgements. Right now, work created with minimal human input or effort is being presented as if fully human-made, implying significant effort and time went into it, when it likely didn't. I think we, as humans, want to know that we’re admiring human creativity as human creativity. Because without that, something essential is lost: authenticity.

What does this mean for the future? Well, if we don't give more thought to how we're using AI, and confront the wider dangers it poses, maybe the best place for it is in the bin, along with that picture.

Alastair

P.S. While writing this article, I've often questioned whether I'm being a hypocrite. I do, after all, use ChatGPT as a creative tool, and I've used it to support me in writing this article (and to help create some of the images used within it). But I don't think my article is anti-AI. I'd say it's anti-overuse, and pro-human. If AI is used as a tool to support human creativity, rather than replace it, then I think it can be a good thing. Like a musical instrument in the hands of a musician, it's always the person who gives it meaning.


Absolutely love this article - even though its content is accurately worrying. Still chuckling at the metaphors of "A stag party in an Air Traffic Control room" and Mr Death :)