In my previous article, we considered how AI is changing human creativity, making it more polished, more “perfect,” and perhaps more hollow. Today, we’re going to look at what it means to live in a world where new technologies are manipulating the truth, making it ever harder to discern.

We’ll discuss how AI is blurring the lines between real and fake, with people increasingly believing things that aren't real, and, perhaps more worryingly, dismissing things that are. And we'll explore how our minds are being subtly shaped by the harmful combination of social media and AI.

But before we get into that, let me begin with a story.

Back in 2005, I was on safari in the Kruger National Park in South Africa, staring out at a pride of young lions as they stood ahead of our vehicle. Something in the distance had caught their attention. Whilst they gazed into the savanna, I noticed a speed sign positioned just to one side of them. Chuckling to myself, I grabbed my camera and captured the moment.

When I arrived back home, I entered the photograph into a local photographic competition – entirely for fun. When it came to judging time, the judge looked very closely at my photograph, speculated loudly that the sign might have been Photoshopped in, and swiftly moved on to the next entry.

I felt frustrated and, to be honest, a bit insulted.

After all, if I were going to Photoshop a sign in, I would have chosen something like this…

The judge had struggled to determine whether my genuine photograph was authentic or fake. So, he erred on the side of caution, perhaps not wanting to be made to look foolish.

Back in 2005, altering photographs required time, effort, and skill – three things I was famously short on. So, my image was entirely genuine.

Back to the future

Fast-forward twenty years, and we find ourselves plugged into a hyper-digital reality where photorealistic images and videos can be conjured up in less time than it takes to say "Hakuna Matata", thanks to AI tools such as Midjourney.

Naturally, this is creating problems. The first is sheer volume: since the launch of AI content generators, the internet has been deluged with AI slop (mass-produced, low-quality AI content), making it increasingly difficult for human-generated content to stand out – something I explored in my previous article. Pinterest is a prime example: it is now dominated by AI-generated pins, a decline many users have dubbed the Enshittification of Pinterest.

Side note: Enshittification was chosen as the Macquarie Dictionary’s Word of the Year for 2024. Oxford chose ‘brain rot’, which is clearly ridiculous because it’s two words. ‘Brain rot’ beat off competition from ‘slop’, which was nominated because of the sharp rise in usage for describing exactly the kind of AI output we’re discussing here.

Of course, the sheer volume of AI slop being produced is not the only concerning aspect of this new technological age; there's another significant problem to consider. As the internet becomes flooded with high-quality, photorealistic AI-generated images and videos, it's becoming increasingly hard for us, as human beings with little experience of this kind of synthetic content, to distinguish between what is real and what is fake.

Now, let’s be clear – fakery is not a brand-new problem – we have, after all, endured at least ten years of stock images featuring grinning strangers pointing at things that don’t exist.

But fakery has reached a new level of scale and sophistication since the arrival of AI, with potentially more harmful consequences. And nowhere has this manipulative content been spreading more rampantly than in the great digital cesspool of social media.

Of course, misinformation has been a problem on social media ever since its inception (in the bowels of Mordor), with posts misrepresenting stories and situations. The difference now is the sheer scale of it, driven by the unholy alliance of revenue-focused algorithms, an absence of oversight and, most recently, AI.

When it comes to AI, machine learning models have rapidly reached an extraordinary level of creative mimicry, making it easier than ever to distort the truth and mislead. The sheer realism of AI-generated visuals means that falsehoods can now be presented far more convincingly.

Why is this development in creative media so important?

Numerous scientific studies have suggested that humans are particularly susceptible to the influence of images and video because our perception is wired for pictures. Our brains process visual information far more quickly than text, and we tend to remember pictures more easily than words; this memory advantage is known as the picture superiority effect.

Widely quoted figures claim that 90 per cent of the information transmitted to our brains is visual, and that images are processed up to 60,000 times faster than text. The exact numbers are hard to trace back to solid research, but the general point perhaps helps to explain why AI-generated visuals can be so persuasive and why misinformation, when presented in such a convincing visual form, can spread so effectively.

This psychological influence of visuals makes the current flood of AI-generated visual content particularly concerning. Consider what's happening on platforms like Facebook.

AI slop in action

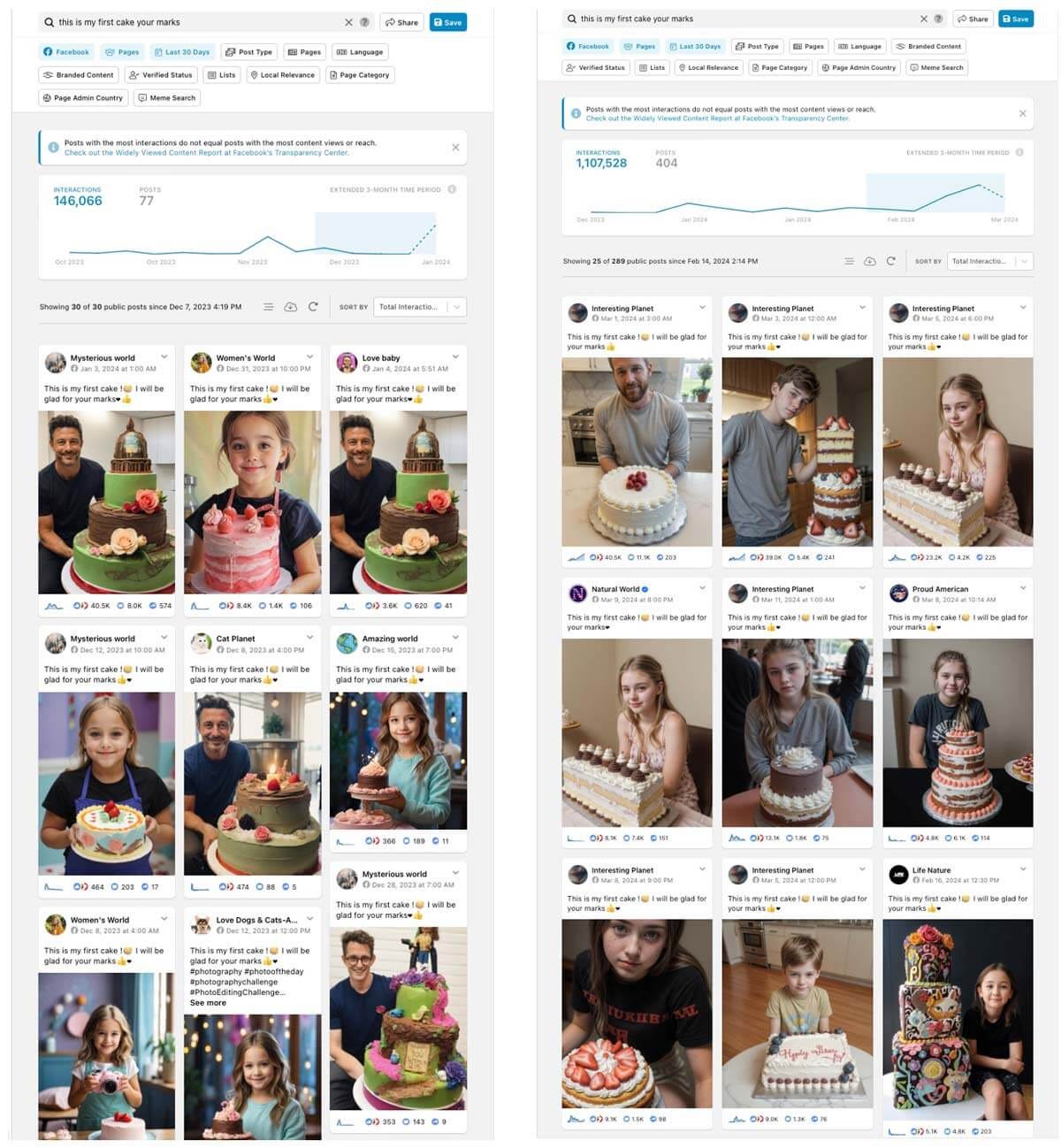

Meta recently decided to end Facebook’s third-party fact-checking programme in the US – a move that drew widespread criticism and backlash from disinformation experts, fact‑checking organisations and online-safety advocates. With the toxic combination of minimal oversight and the rapid expansion of AI, the platform is now awash with scams and clickbait. It only took me about ten seconds of scrolling my Feed to find this example...

Examine the images closely and the tell-tale signs are there: the faces don't quite look right. It's a set of AI-generated images accompanied by a nonsensical story, posted purely as clickbait. Yet these images are of a sufficiently high quality to deceive people, as the number of reactions and shares on the post makes clear. The kicker is that every like, share or comment boosts the post’s perceived authenticity and drives the algorithm to show it to more people. Behold: the snowball effect.

So, we’ve mentioned the combination of AI plus algorithms. But there’s a third ingredient in the harmful mix: human nature. A 2018 study from MIT, which analysed around 126,000 news stories spread on Twitter more than 4.5 million times, found that false stories were 70% more likely to be retweeted than true ones and reached people about six times faster. Crucially, this wasn't down to bots, which spread true and false news at similar rates, but to humans, who were simply more likely to pass falsehoods on.

When you combine this natural human tendency with algorithms designed to maximise engagement for ad revenue, and the ease with which realistic AI-generated content can now be produced, you have a perfect storm for an infodemic of misinformation and propaganda.

And it's worth remembering: AI is only going to get better at image and video creation – you need only look at how far it has come in just a couple of years to appreciate this.

Take, for example, this AI-generated image of a new Olympic event that I conceptualised back in August 2024 – Trampolining With Trifle…

And now compare it to this generated image from just a year later…

I’m now fully convinced this would be a world-class spectacle. Who wouldn’t tune in to watch this pudding pandemonium?

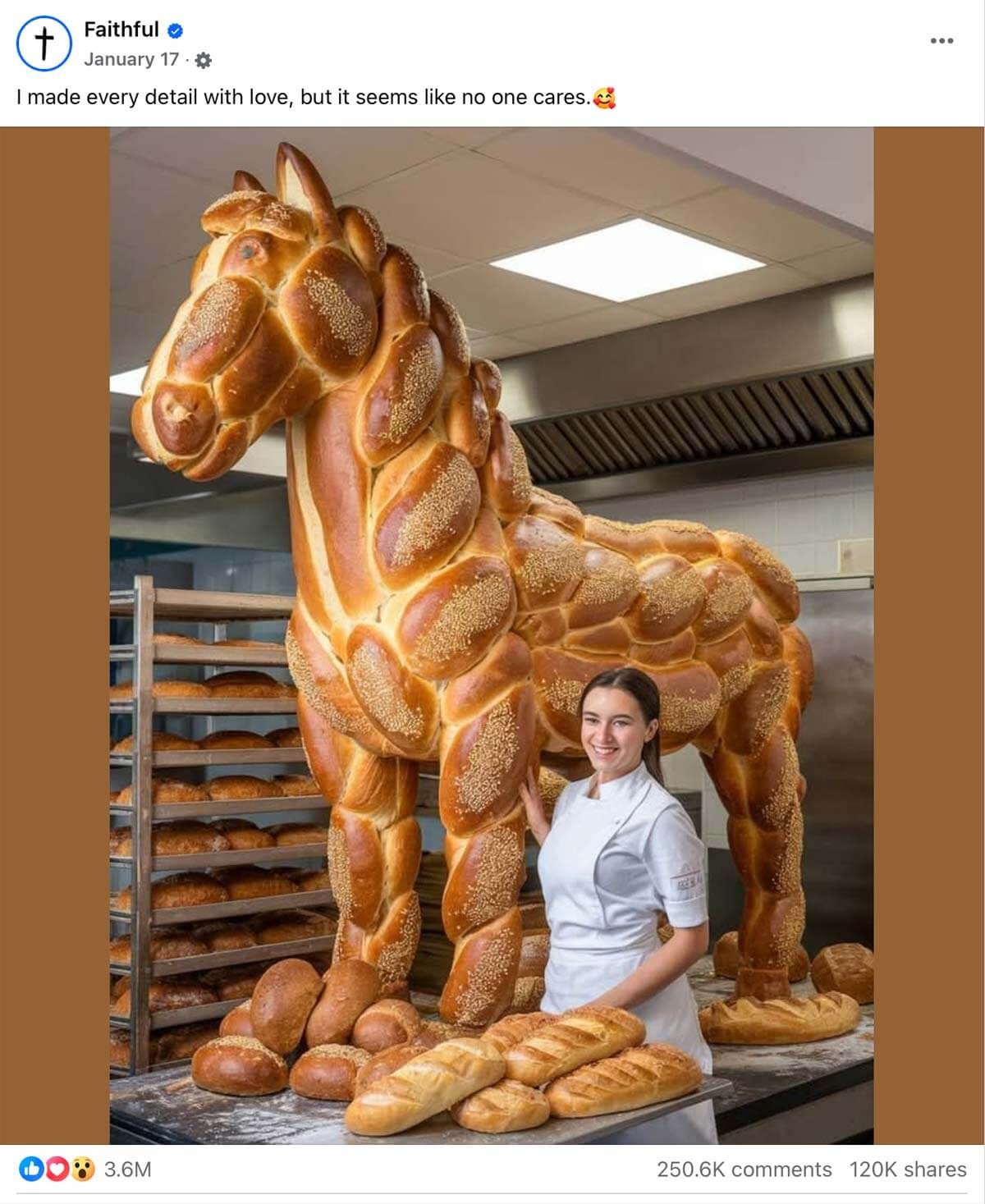

Back to Facebook (ugh, do I have to?). It’s worth taking a closer look at the sort of content that’s appearing on its platform. Meta's Q1 2025 Widely Viewed Content Report features several AI-generated images and videos in its ten most-viewed posts. This one, for example, ranks fourth in the list with 49.1 million views.

Less horse bread, more horse shit. I can’t decide whether it looks happy or just stale.

Take a second to glance at the number of reactions, comments and shares this post has received. You might be thinking, "Sure, it's fake, but isn't it just a harmless bit of fun?" Well, I’d argue it isn’t – for a couple of reasons. Let's start with the most obvious one – the intent behind it.

In a detailed study published in the Harvard Kennedy School Misinformation Review in August 2024, researchers analysed 125 Facebook Pages, each of which had posted at least 50 AI-generated images, and found that many of these Pages were dedicated to spam or scams, with some forming coordinated clusters operated by the same people.

The study highlighted how spam Pages used clickbait tactics to drive users to low-quality content farms, while scam Pages tried to sell non-existent products or harvest personal information.

Nothing screams 'harmless fun' quite like buying an imaginary product or handing over your personal details to a criminal, eh?

There can be a genuine threat behind these kinds of posts. Social media algorithms, meanwhile, are designed to keep you on the platform for as long as possible in order to maximise advertising revenue – a hallmark of the attention economy.

This means serving you more of the same type of content you've already interacted with.

Bear this in mind when I tell you that the Harvard Kennedy School Misinformation Review study found that a high percentage of content appearing in users’ Feeds came from Pages they had not actually chosen to follow.

This is supported by recent data from Meta’s own Widely Viewed Content Report, which shows that in Q1 2025, 35.7% of Feed content views in the US came from ‘unconnected posts’ – up from just 8% in Q2 2021. These are recommendations of content from Pages, Groups, or people users are NOT connected to and have not actively chosen to follow.

In other words, more than a third of what US users see in their Facebook Feed comes from sources they never subscribed to or followed – it’s simply served up by the algorithm. This can create fertile ground for AI-generated misinformation and scams to reach a wide audience more easily, often without users realising the synthetic nature of what they are seeing.

Liked an AI post about cake?

Look forward to seeing many more of them...

The other problem

Beyond the immediate threats of scams and misinformation lies another problem – one that could have important repercussions for us as a society as we get used to living in this new world.

It's worth remembering that we're still at a stage of naivety when it comes to AI because it's such a recent development. While we've always understood the danger of believing falsehoods, there's now a danger of the opposite happening as well: that we stop knowing what or whom to trust, and that we stop believing things that are true. This erodes the fabric of society, making us far more vulnerable to manipulation and cynicism – the kind that thrives in cult-like systems of control.

This leads me to something called The Liar's Dividend. It's worth understanding this concept because once you do, you'll realise just how prevalent it’s becoming in our modern world. It’s a tactic we now see regularly in politics and media, and it's probably best exemplified by the phrase 'fake news' – popularised by Donald Trump and now widely wielded as a political weapon to discredit legitimate journalism and factual reporting around the world.

The Liar's Dividend refers to the phenomenon where, in a world full of misinformation and manipulated content, individuals can simply dismiss inconvenient truths by claiming they are fake or fabricated.

All of this speaks to a broader societal shift. Quite simply, the more sophisticated our tools for creating convincing AI content become, the less trust we might start placing in anything at all. This potentially allows liars and perpetrators of bad acts to escape accountability by sowing doubt and confusion. And as you read this, you can probably recognise that this is a path we're already heading down.

It's also worth pausing to mention the "illusory truth effect" – the phenomenon where the more often you hear a statement, the more 'true' it sounds. Repetition can make people believe things regardless of whether they are actually true. Now think about that in the context of social media algorithms serving you more of the same type of content you regularly interact with. You can quickly find yourself in a digital echo chamber of propaganda and unquestioning acceptance.

What does this mean for truth?

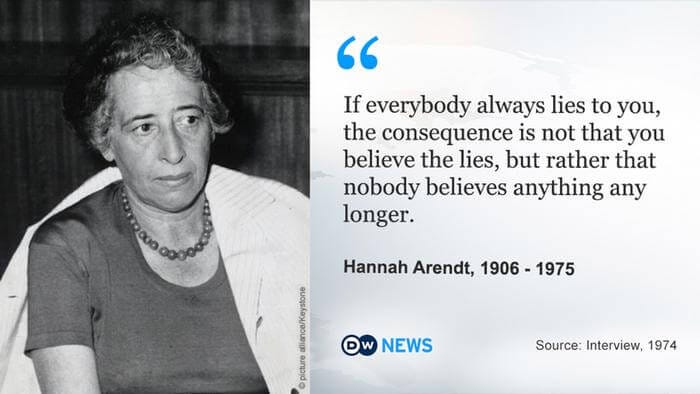

This is the deeper concern. Misinformation is just one part of the story. The greater risk is a loss of trust in everything – a point chillingly captured by political theorist Hannah Arendt in 1974: "If everybody always lies to you, the consequence is not that you believe the lies, but rather that nobody believes anything any longer."

In the new age of AI-generated content, we're seeing some concerning parallels to Arendt's observations about how constant exposure to falsehoods risks undermining people's confidence in distinguishing truth from lies. As one recent analysis noted, this has significant implications for public trust.

In a social media-saturated world governed by algorithms designed to maximise revenue, we're increasingly at risk of manipulation. As a result, we're heading towards a time where truth becomes less an objective standard and more like beauty: in the eye of the beholder.

There seems little doubt that we will have to learn – and quickly – to become better at filtering and discerning the plausibility of online content. At present, we're still in the early stages of this technology, and we remain far too naive, too trusting, and too quick to believe things we shouldn’t. A 2022 study (conducted before the AI boom) by Ofcom found that a third of internet users were unaware that online content might be false or biased, and that we commonly overestimate our ability to spot misinformation.

Education forms one part of the solution. The authors of the Harvard research suggest teaching general digital literacy best practices, such as Mike Caulfield’s “SIFT” method:

Stop

Identify the source

Find better coverage

Trace claims, quotes, and media to the original context.

This approach, however, requires effort and a willingness to remain open-minded and to question and investigate posts before believing and sharing them. For those prone to confirmation bias (the tendency to favour information that confirms existing values or beliefs), this might be a big ask. Many of us are too entrenched, too reactive, and too uncomfortable with being wrong, just like the photo judge.

The other part of the solution lies in stronger regulation, not only of social media platforms, but also of the AI technologies that generate this content. Yet meaningful regulatory frameworks for AI remain in their infancy, and we’ve seen very little progress when it comes to introducing controls and rules for social media companies.

So, as AI-generated slop continues to flood the internet and our social media feeds, our best defence seems to be a personal one. Remain sceptical, question what we see, and help others do the same. The need for critical thinking and proof-seeking has never been greater.

That’s right: in a world drowning in misinformation and AI slop, take a lesson from the bread-horse: never forget the importance of proof.

Alastair

If you enjoyed this article, please consider giving it a like and sharing it with any potential Trampolining With Trifle contenders.

You can also subscribe for free below if you’d like my next article to sneak politely into your inbox.
